Data gravity: what is it?

What Is Data Gravity?

This simple concept helps explain the rise of cloud and edge computing within the era of big data.

Data gravity is a term coined by GE engineer Dave McCrory in a 2010 blog post, referring to the way data “attracts” other data and services. Just as a planet’s gravity pulls on other objects, the analogy holds that large accumulations of data are more likely to accrue even more data.

It’s a useful analogy in that it explains why both cloud computing and edge computing have come to dominate the way that data is stored and managed. So far, this theory has generally held true for enterprises seeking to manage their expanding stores of data.

Some 2.5 quintillion bytes of data are generated each day, so the question of how and where to store this data most efficiently will only become more urgent. Moving huge volumes of data can be a major challenge and often demands creative solutions. Amazon Web Services’ Snowmobile, a storage data center delivered by a semi truck, is one of the more extreme measures. With this on-site option, enterprises can transfer in a few weeks what would otherwise take years to send to the AWS cloud over the network.
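The “weeks versus years” gap comes down to simple arithmetic: transfer time is data size divided by usable bandwidth. The sketch below assumes a hypothetical 100 PB dataset and a dedicated 10 Gbit/s link running at full speed; both figures are illustrative, and real transfers also lose time to protocol overhead and contention.

```python
def transfer_time_days(data_bytes: float, link_bits_per_second: float,
                       efficiency: float = 1.0) -> float:
    """Days needed to push data_bytes over a network link.

    efficiency is the fraction of the nominal bandwidth actually achieved
    (1.0 = ideal; real links usually deliver less).
    """
    if link_bits_per_second <= 0 or not 0 < efficiency <= 1:
        raise ValueError("bandwidth must be positive and efficiency in (0, 1]")
    seconds = data_bytes * 8 / (link_bits_per_second * efficiency)
    return seconds / 86_400  # seconds per day


if __name__ == "__main__":
    PB = 10**15
    # Hypothetical: 100 PB over a dedicated 10 Gbit/s link at full speed.
    days = transfer_time_days(100 * PB, 10 * 10**9)
    print(f"{days:.0f} days (~{days / 365:.1f} years)")  # 926 days (~2.5 years)
```

Even under these ideal assumptions the transfer takes years, which is why physically shipping the storage can win.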

Effective data analytics and artificial intelligence rely on these increasingly massive data streams, but in order to use all this data with reasonable efficiency and speed, applications have to be close to the source. All things being equal, the shorter the distance, the higher the throughput and the lower the latency. These limitations form the basic principle behind data gravity.
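Why distance matters can be sketched with two physical bounds. Round-trip time can never be lower than the light-in-fiber delay, and a single TCP flow can deliver at most one window of data per round trip. The sketch assumes light in fiber travels about 200,000 km/s and uses a hypothetical 64 KiB window; real networks add routing and queuing delay, use larger scaled windows, and at short distances the link capacity itself becomes the limit.

```python
# Assumption: light travels through optical fiber at roughly 200,000 km/s
# (about two-thirds of its speed in a vacuum). Routing, queuing and
# processing delays come on top of this physical floor.
FIBER_KM_PER_SECOND = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber for a one-way distance."""
    return 2 * distance_km / FIBER_KM_PER_SECOND * 1000


def max_tcp_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound for a single TCP flow: one window delivered per round trip."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000


if __name__ == "__main__":
    window = 64 * 1024  # hypothetical 64 KiB receive window
    for km in (10, 500, 4000):
        rtt = min_round_trip_ms(km)
        print(f"{km:>5} km: RTT >= {rtt:.1f} ms, "
              f"throughput <= {max_tcp_throughput_mbps(window, rtt):.1f} Mbit/s")
```

Moving from 500 km to 4,000 km multiplies the minimum round trip by eight and divides the per-flow ceiling by eight, which is the “shorter distance, higher throughput, lower latency” relationship in numbers.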

Enterprises and Data Gravity

Many businesses use data to enable their operations, from internal data like financial files to external data like weather information or statistics on customer behavior. For these enterprises, data gravity imposes certain limitations on how they do business.

Traditionally, data was generated and stored internally, on-premises. This made it easy for analytics platforms to “own” the data they were crunching. But as the amount of external data increases, applications have to migrate data from other platforms in order to analyze it. These days, businesses tend to store and access their data using hybrid solutions that include both cloud and on-premises storage. But businesses don’t just want to store data; they want to use it.

For over a decade, data storage has been migrating to the cloud. The percentage of enterprises processing data in the cloud grew from 58% in 2017 to 73% in 2018, reflecting improved tools and scalability — and certainly contributing to further data gravity within the cloud.

But the IoT presents a new set of challenges in dealing with data. IoT devices sit at the periphery of the network, so the data they produce may not be easily used by centralized applications in the cloud. This is especially true given the deluge of data these increasingly critical devices produce. At the same time, for IoT devices in factories, hospitals, or automobiles, low-latency data processing is an absolute necessity. Edge computing seeks to solve this problem by bringing data processing right to the device, avoiding the bandwidth costs and slowdowns associated with pushing data to the cloud.
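One common edge pattern is to reduce raw sensor readings to a compact summary on the device and send only the summary upstream. The sketch below assumes a hypothetical temperature sensor sampled at 100 Hz and a one-minute window; the field names and JSON payload format are illustrative, not taken from any particular IoT platform.

```python
import json
import statistics
from typing import Iterable


def summarize_window(readings: Iterable[float]) -> dict:
    """Reduce a window of raw sensor readings to a compact summary.

    Intended to run on the edge device so only the summary is sent upstream.
    """
    values = list(readings)
    if not values:
        raise ValueError("empty window")
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": statistics.fmean(values),
    }


if __name__ == "__main__":
    # Hypothetical: one minute of temperature samples at 100 Hz.
    raw = [20.0 + (i % 50) * 0.01 for i in range(6000)]
    summary = summarize_window(raw)
    raw_bytes = len(json.dumps(raw).encode())
    summary_bytes = len(json.dumps(summary).encode())
    print(f"raw payload: {raw_bytes} bytes, summary payload: {summary_bytes} bytes")
```

The trade-off is that the cloud no longer sees individual samples, so what to aggregate (and what to keep raw for later analysis) is a design decision per workload.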

Across the board, IT teams and networking solutions providers are working to enable enterprises to better use this data. IBM recently overhauled its Cloud Private for Data platform to allow customers to use analytics wherever their data is, whether that’s in an IoT device or a private cloud. And Microsoft’s “Intelligent Edge” strategy calls for enabling software to run on IoT devices, using ever-smaller processing hardware.

Managing Your Company’s Data

Enterprises are eager to generate and make good use of the data to which they have access — and for good reason. Data analytics is increasingly providing the insights they need to remain competitive. Many industries have already come to rely on the operational advantages provided by IoT devices. But just as many IT departments are struggling to effectively manage the storage and processing of all that data.

If your business is looking to overcome the limitations of data gravity, it may be time to pair with the experts at Turn-key Technologies (TTI). With decades of experience and a thorough understanding of data storage and management, TTI has the expertise necessary to help modern-day enterprises implement cloud and edge computing solutions that will help them capitalize on their data with the speed and cost-efficiency the modern marketplace demands.

Data Gravity and What It Means for Enterprise Data Analytics and AI Architectures

BrandPosts are written and edited by members of our sponsor community. BrandPosts create an opportunity for an individual sponsor to provide insight and commentary from their point-of-view directly to our audience. The editorial team does not participate in the writing or editing of BrandPosts.

New data-intensive applications like data analytics, artificial intelligence and the Internet of things are driving huge growth in enterprise data. With this growth comes a new set of IT architectural considerations that revolve around the concept of data gravity. In this post, I will take a high-level look at data gravity and what it means for your enterprise IT architecture, particularly as you prepare to deploy data-intensive AI and deep learning applications.

What is data gravity?

Data gravity is a metaphor introduced into the IT lexicon by a software engineer named Dave McCrory in a 2010 blog post.[1] The idea is that data and applications are attracted to each other, similar to the attraction between objects that is explained by the law of gravity. In today’s enterprise data analytics context, as datasets grow larger, they become harder to move. So the data stays put, and the things attracted to it, such as applications and processing power, move to where the data resides.
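The “data stays put, compute moves” conclusion can be illustrated by counting the bytes each placement strategy sends over the network. This is a deliberately simplified sketch: the `Job` fields and sizes are hypothetical, and a real placement decision would also weigh compute availability, licensing, security and egress pricing.

```python
from dataclasses import dataclass


@dataclass
class Job:
    dataset_bytes: int    # size of the data the job reads
    app_image_bytes: int  # size of the application/container to ship
    result_bytes: int     # size of the output sent back to the requester


def bytes_moved(job: Job, ship_data: bool) -> int:
    """Bytes that cross the network under each placement strategy."""
    if ship_data:
        # Copy the dataset to where the application already runs.
        return job.dataset_bytes
    # Ship the application to the data and send only the results back.
    return job.app_image_bytes + job.result_bytes


def choose_placement(job: Job) -> str:
    """Pick whichever strategy moves fewer bytes."""
    if bytes_moved(job, ship_data=True) < bytes_moved(job, ship_data=False):
        return "move data to compute"
    return "move compute to data"


if __name__ == "__main__":
    GB, TB = 10**9, 10**12
    job = Job(dataset_bytes=50 * TB, app_image_bytes=2 * GB, result_bytes=5 * GB)
    print(choose_placement(job))  # move compute to data
```

For small datasets the same rule flips, which matches the metaphor: only data with enough “mass” pulls applications toward it.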

Why should enterprises pay attention to data gravity?

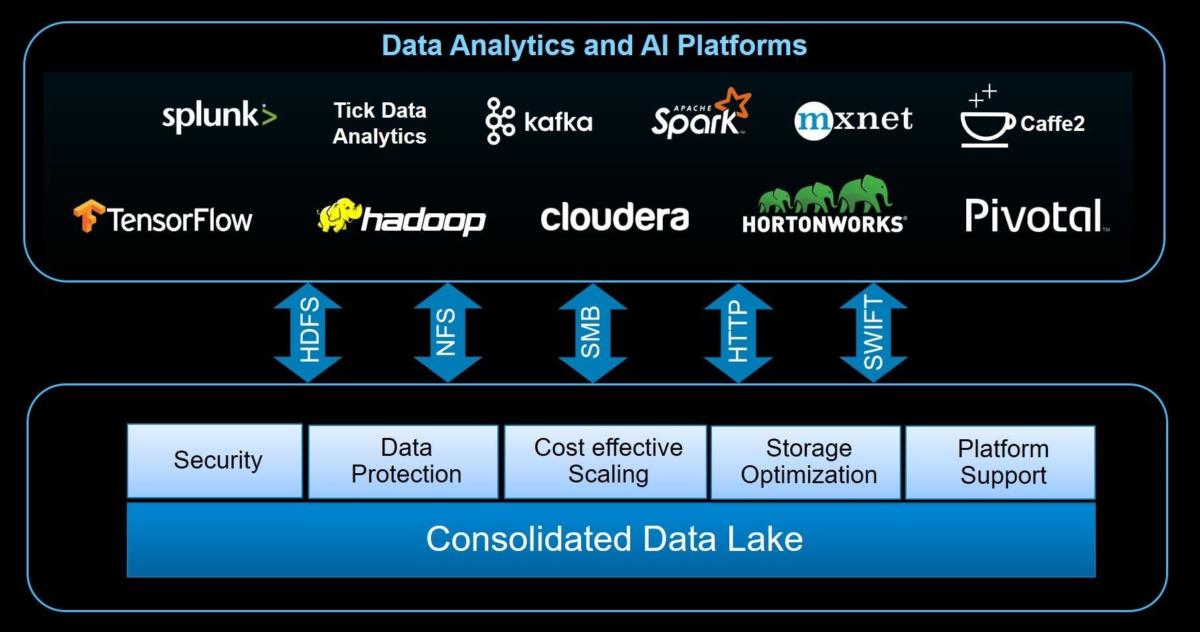

Digital transformation within enterprises, including IT transformation, mobile devices and the Internet of things, is creating enormous volumes of data that are all but unmanageable with conventional approaches to analytics. Typically, data analytics platforms and applications live in their own hardware and software stacks, and the data they use resides in direct-attached storage (DAS). Analytics platforms such as Splunk, Hadoop and TensorFlow expect to own the data, so data migration becomes a precursor to running analytics.

As enterprises mature in their data analytics practices, this approach becomes unwieldy. When you have massive amounts of data in different enterprise storage systems, it can be difficult, costly and risky to move that data to your analytics clusters. These barriers become even higher if you want to run analytics in the cloud on data stored in the enterprise, or vice versa.

These new realities for a world of ever-expanding data sets point to the need to design enterprise IT architectures in a manner that reflects the reality of data gravity.

How do you get around data gravity?

A first step is to design your architecture around a scale-out network-attached storage (NAS) platform that enables data consolidation. This platform should support a wide range of traditional and next-generation workloads and applications that previously used different types of storage. With this platform in place, you are positioned to manage your data in one place and bring the applications and processing power to the data.

What are the design requirements for data gravity?

Here are some of the high-level design requirements for data gravity.

Security, data protection and resiliency

An enterprise data platform should have built-in capabilities for security, data protection and resiliency. Security includes authenticating users, authorizing them, and controlling and auditing access to data assets. Data protection and resiliency involve keeping data available despite disk, node, network and site failures.
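The three security duties named above (authentication, authorization, auditing) can be sketched as one access check. Everything here is hypothetical and in memory: the user table, the path-based ACL and the audit list stand in for what a real platform would delegate to a directory service and a persistent, tamper-evident audit trail.

```python
import hashlib
import hmac
from datetime import datetime, timezone

# Hypothetical in-memory stores for illustration only.
SALT = b"demo-salt"  # illustration only; use a random salt per user in practice


def _hash(password: str) -> bytes:
    # Slow, salted key derivation so stored values are not plain hashes.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), SALT, 100_000)


USERS = {"alice": _hash("s3cret")}            # user -> password hash
ACL = {"/lake/finance": {"alice": {"read"}}}  # path -> user -> allowed actions
AUDIT_LOG: list = []                          # (timestamp, user, path, action, allowed)


def authenticate(user: str, password: str) -> bool:
    """Authentication: is this really the user it claims to be?"""
    stored = USERS.get(user)
    return stored is not None and hmac.compare_digest(stored, _hash(password))


def access(user: str, password: str, path: str, action: str) -> bool:
    """Authenticate, then authorize against the ACL, and audit every attempt."""
    allowed = authenticate(user, password) and action in ACL.get(path, {}).get(user, set())
    AUDIT_LOG.append((datetime.now(timezone.utc).isoformat(), user, path, action, allowed))
    return allowed


if __name__ == "__main__":
    print(access("alice", "s3cret", "/lake/finance", "read"))   # True
    print(access("alice", "s3cret", "/lake/finance", "write"))  # False
    print(access("bob", "guess", "/lake/finance", "read"))      # False
    print(len(AUDIT_LOG))                                       # 3
```

Because every request passes through the same check, a single consolidated copy of the data gets one consistent policy and one audit trail.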

Security, data protection and resiliency should be applied across all applications and data in a uniform manner. This uniformity is one of the advantages of maintaining just one copy of data in a consolidated system, as opposed to having multiple copies of the same data spread across different systems, each of which has to be secured, protected and made resilient independently.

The data platform must be highly scalable. You might start with 5 TB of storage and then soon find that you are going to need to scale to 50 TB or 100 TB. So, look for a platform that scales seamlessly, from terabytes to petabytes.

At the same time, you need to choose platforms where personnel and infrastructure costs do not scale in tandem with increases in data. In other words, a 10x increase in data should not bring a 10x increase in personnel and infrastructure costs; rather, these costs should grow at a much lower rate than the data does. One way to accomplish this goal is through storage optimization.
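One way to make “costs should scale at a much lower rate than data” concrete is a simple power-law cost model, assumed here for illustration: with cost proportional to data volume raised to an exponent below 1, a 10x growth in data yields well under 10x growth in cost. The exponent is hypothetical and would have to be measured for a real platform.

```latex
C(D) = c_0 \, D^{\alpha}, \qquad
\frac{C(10D)}{C(D)} = 10^{\alpha}
\quad\Rightarrow\quad
\alpha = 1:\ 10\times, \qquad
\alpha = 0.5:\ 10^{0.5} \approx 3.2\times
```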

The data platform should enable optimization for both performance and capacity. This requires a platform that supports tiers of storage, so you can keep the most frequently accessed data in faster tiers and hold seldom-used data in lower-cost, higher-capacity tiers. Data should move between tiers automatically, with the system deciding where it is stored based on enterprise policies that users can configure.
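A policy-driven tiering engine can be sketched as a function that maps each file’s access statistics to a tier, plus a planner that lists the moves needed. The tier names, thresholds (10 accesses in 30 days, 90 days idle) and file paths are hypothetical; real platforms expose their own policy languages.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FileStats:
    path: str
    last_access: datetime
    accesses_last_30d: int


def choose_tier(f: FileStats, now: datetime,
                hot_min_accesses: int = 10,
                cold_after: timedelta = timedelta(days=90)) -> str:
    """Hypothetical policy: busy files on fast storage, idle files on archive."""
    if f.accesses_last_30d >= hot_min_accesses:
        return "performance"  # e.g. flash tier
    if now - f.last_access > cold_after:
        return "archive"      # high-capacity, low-cost tier
    return "capacity"         # middle tier


def plan_moves(files, current_tier: dict, now: datetime) -> list:
    """List (path, from_tier, to_tier) moves the policy would trigger."""
    moves = []
    for f in files:
        target = choose_tier(f, now)
        if current_tier.get(f.path) != target:
            moves.append((f.path, current_tier.get(f.path), target))
    return moves


if __name__ == "__main__":
    now = datetime(2024, 1, 1)
    files = [
        FileStats("/lake/clicks.parquet", now - timedelta(hours=1), 250),
        FileStats("/lake/2019_logs.tar", now - timedelta(days=400), 0),
        FileStats("/lake/q3_report.csv", now - timedelta(days=20), 2),
    ]
    current = {f.path: "capacity" for f in files}
    for move in plan_moves(files, current, now):
        print(move)
```

Only the first two files change tier here; the report stays on the capacity tier because it is neither busy nor idle long enough.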

Data analytics and AI platforms change often, so data must be accessible across different platforms and applications, including those you use today and those you might use in the future. This means your platform should support the data access interfaces most commonly used by data analytics and AI software platforms. Examples include NFS, SMB, HTTP, FTP, OpenStack Swift-based object access for your cloud initiatives, and native Hadoop Distributed File System (HDFS) access to support Hadoop-based applications.
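Multiprotocol access means one stored file is reachable by many tools without making a copy for each. The sketch below only builds the path or URL each kind of client would use for the same file; the cluster name, NFS mount point, SMB share name and HDFS port are all hypothetical placeholders, not the configuration of any specific product.

```python
from pathlib import PurePosixPath

# Hypothetical names for one consolidated storage cluster.
CLUSTER = "datalake.example.com"
NFS_MOUNT = "/mnt/datalake"
SMB_SHARE = "lake"
HDFS_PORT = 8020


def access_paths(relative_path: str) -> dict:
    """Ways different clients could reach the SAME stored file.

    Assumes the platform exposes a single namespace over several protocols,
    so no per-tool copy of the data is needed.
    """
    rel = PurePosixPath(relative_path.lstrip("/"))
    return {
        "nfs": str(PurePosixPath(NFS_MOUNT) / rel),                      # POSIX tools
        "smb": "\\\\" + CLUSTER + "\\" + SMB_SHARE + "\\" + "\\".join(rel.parts),
        "http": f"https://{CLUSTER}/{rel.as_posix()}",                   # object/web clients
        "hdfs": f"hdfs://{CLUSTER}:{HDFS_PORT}/{rel.as_posix()}",        # Hadoop jobs
    }


if __name__ == "__main__":
    for protocol, location in access_paths("sales/2024/q1.csv").items():
        print(f"{protocol:>5}: {location}")
```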

These are some of the key considerations as you design your architecture to address data gravity issues when deploying large-scale data analytics and AI solutions. For a closer look at the features of a data lake that can help you avoid the architectural traps that come with data gravity, explore the capabilities of Dell EMC data analytics platforms and AI platforms, all based on Dell EMC Isilon, the foundation for a consolidated data lake.

data gravity

Data gravity is the ability of a body of data to attract applications, services and other data.

The force of gravity, in this context, can be thought of as the way software, services and business logic are drawn to data relative to its mass (the amount of data). The larger the amount of data, the more applications, services and other data will be attracted to it and the more quickly they will be drawn.

In practical terms, moving data farther and more frequently impacts workload performance, so it makes sense for data to be amassed and for associated applications and services to be located nearby. This is one reason why internet of things (IoT) applications need to be hosted as close as possible to where the data they use is being generated and stored.

IT expert Dave McCrory coined the term data gravity as an analogy for the physical way that objects with more mass naturally attract objects with less mass.
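The physical law behind the analogy is Newton’s law of universal gravitation. Read as a metaphor only (this is not McCrory’s own data gravity formula, which is not reproduced here), the dataset plays the role of the larger mass, an application or service the smaller one, and the network distance or latency between them the role of separation: more data, or less distance, means a stronger pull.

```latex
F = G \, \frac{m_1 \, m_2}{r^2}
```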

According to McCrory, data gravity is moving to the cloud. As more and more internal and external business data is moved to the cloud or generated there, data analytics tools are also increasingly cloud-based. His explanation of the term differentiates between naturally occurring data gravity and similar changes created through external forces such as legislation, throttling and manipulative pricing, which McCrory refers to as artificial data gravity.

McCrory recently released the Data Gravity Index, a report that measures, quantifies and predicts the intensity of data gravity for the Forbes Global 2000 Enterprises across 53 metros and 23 industries. The report includes a patent-pending formula for data gravity and a methodology based on thousands of attributes of Global 2000 enterprise companies’ presences in each location, along with variables for each location including

Data Gravity: What it Means for Your Data

Data is only as valuable as the information it is used to create. The need for valuable, business-specific, data-driven information has created a level of demand that can only be met by maintaining vast amounts of data. As enterprises move forward, data will only continue to grow. This continual expansion has given rise to the phenomenon known as data gravity.

What is data gravity?

Data gravity is the observed characteristic of large datasets that describes their tendency to attract smaller datasets, as well as relevant services and applications. It also speaks to the difficulty of moving a large, “heavy” dataset.

Think of a large body of data, such as a data lake, as a planet, and services and applications being moons. The larger the data becomes, the greater its gravity. The greater the gravity, the more satellites (services, applications, and data) the data will pull into its orbit.

Large datasets are attractive because of the diversity of data available. They are also attractive (i.e., have gravity) because the technologies used to store such large datasets, such as cloud services, are available in various configurations that allow more choice in how data is processed and used.

The concept of data gravity is also used to indicate the size of a dataset and discuss its relative permanence. Large datasets are “heavy,” and difficult to move. This has implications for how the data can be used and what kind of resources would be required to merge or migrate it.

As business data becomes an ever more important commodity, data gravity must be taken into consideration when designing solutions that will use that data. One must consider not only current data gravity but also its potential growth. Data gravity will only increase over time and, in turn, will attract more applications and services.

How data gravity affects the enterprise

Data must be managed effectively to ensure that the information it is providing is accurate, up-to-date, and useful. Data gravity comes into play with any body of data, and as a part of data management and governance, the enterprise must take the data’s influence into account.

Without proper policies, procedures, and rules of engagement, the sheer amount of data in a warehouse, lake, or other dataset can become overwhelming. Worse yet, it can become underutilized. Application owners may fall back on using only the data they own to make decisions, leading to inconsistent decisions about a single application with multiple owners.

Data integration is strongly affected by data gravity, especially efforts to unify systems and reduce the resources wasted on errors and rework. Placing data in one central repository means that data gravity does not build up slowly over time but instead increases sharply in a short period.

Understanding how this new data gravity will affect the enterprise helps ensure that contingencies are in place to handle the data’s rapidly increasing influence on the system. For example, consider how data gravity affects data analysis. Moving massive datasets into analytic clusters is an inefficient, not to mention expensive, process. The enterprise will need better storage optimization that allows for greater data maneuverability.

The problem with data gravity

Data gravity presents data managers with two issues: latency and data nonportability.

Dealing with data gravity

Data gravity is a reality of the technological times that must be handled with as much finesse as possible to keep things moving smoothly and efficiently. The biggest weapons in the data manager’s arsenal will be data management and governance, as well as masterful data integration.

Data management

Data management is a must, regardless of whether the data is stored in the cloud or on-premises. Data management lets the enterprise put data gravity to work: how the data is going to be used, by whom, and for what purpose all help define which applications and services need to run in the cloud alongside the data.

With data gravity bringing in more applications and services over time, it is essential that data integrity be maintained to provide accurate and complete data.

Data governance

Data governance is a core piece of data management. Data governance is best explained as a role system that defines accountability and responsibility with regard to the data.
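A governance role system can be sketched as a registry that records, for each dataset, who is accountable and who is responsible, and that flags datasets nobody owns yet. The “owner” and “steward” roles are common governance terms used here as an assumption; the dataset and role names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DatasetRoles:
    name: str
    owner: str    # accountable: makes final decisions about the dataset
    steward: str  # responsible: maintains quality and definitions day to day


REGISTRY: dict = {}


def register(roles: DatasetRoles) -> None:
    """Refuse to register a dataset unless both roles are filled."""
    if not roles.owner or not roles.steward:
        raise ValueError(f"{roles.name}: owner and steward are both required")
    REGISTRY[roles.name] = roles


def escalation_path(dataset: str) -> list:
    """Who handles an issue: the steward first, then the accountable owner."""
    roles = REGISTRY[dataset]
    return [roles.steward, roles.owner]


def ungoverned(dataset_names) -> list:
    """Datasets nobody has been made accountable for yet."""
    return sorted(name for name in dataset_names if name not in REGISTRY)


if __name__ == "__main__":
    register(DatasetRoles("customers", owner="head_of_sales", steward="crm_team"))
    print(escalation_path("customers"))         # ['crm_team', 'head_of_sales']
    print(ungoverned(["customers", "orders"]))  # ['orders']
```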

This is paramount to addressing data gravity issues because it produces higher-quality data and enables data mapping. Good data governance provides benefits of its own and also improves data management overall.

Data integration

Data integration is how organizations increase the efficiency of systems and applications while also increasing the ability to leverage data.

While it might seem counterintuitive to use data integration as a means of dealing with data gravity, it boils down to having one data source instead of many. One central source would certainly be voluminous, but it would also mean that the data manager contends with only one source of data gravity instead of several.
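Consolidating several sources into one can be sketched as a keyed merge. The merge rule below (first non-empty value for a field wins, and later sources fill in gaps) is a hypothetical precedence rule chosen for illustration; production integration tools add record matching, conflict resolution and lineage tracking.

```python
def consolidate(*sources: list, key: str = "customer_id") -> dict:
    """Merge records from several systems into one keyed source.

    The first non-empty value seen for a field wins; later sources only
    fill in fields that are still missing (hypothetical precedence rule).
    """
    merged: dict = {}
    for source in sources:
        for record in source:
            target = merged.setdefault(record[key], {})
            for field_name, value in record.items():
                if value not in (None, "") and field_name not in target:
                    target[field_name] = value
    return merged


if __name__ == "__main__":
    crm = [{"customer_id": 1, "name": "Acme", "email": ""}]
    billing = [
        {"customer_id": 1, "email": "ap@acme.example"},
        {"customer_id": 2, "name": "Globex"},
    ]
    for customer_id, record in consolidate(crm, billing).items():
        print(customer_id, record)
```

Listing the most trusted system first makes it the authority on conflicting fields, which is one simple way to encode the “one central source” decision.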

The future of the cloud and data gravity

The biggest drawback of data gravity is the need for proximity between the data and the applications that use it.

For example, more and more enterprises are seeking to share their data to produce a more valuable, robust dataset that benefits everyone involved. To do this effectively, each of the enterprises involved would need close proximity to the data.

Enter the cloud. Enterprises across the country, or even across the globe, can achieve this proximity by leveraging cloud technology.

Cloud technology can be viewed as both a solution and a problem, however. Cloud technology has allowed for massive expansion of data bodies, which has served to increase data gravity rather than diffuse it.

On the other side of the coin, cloud technology serves as a means of defying data gravity by giving enterprises scalable processing power close to the data they need. This pushes the cloud to the fore and discourages on-premises data storage.

How to start managing data gravity

Data gravity does not have to be an insurmountable problem. Data gravity is an environmental factor that affects the world of data, but knowing about these effects allows the data manager to take control and deal with the potential fallout. Although it has few exact answers, enterprises can take steps to mitigate the negative impact of data gravity through proper data management and data governance.

Data management and governance must evolve as technology and processes become more advanced. Dealing with increased complexity can seem daunting, but having the right tools goes a long way in easing that strain. Talend Data Fabric is a suite of applications that can help tackle data gravity by providing proven tools for data management, governance, and data integration.

Don’t be left adrift in your data’s orbit. Take the first step in controlling data gravity by seeing how Talend Data Fabric can assist you on your way to speedy, accurate, and superior data management.
